[ith·nee·on]

noun, adjective, ith·ne·on, ith·ne·on·ic.

-noun

The fundamental particle of scientific self-importance. Literally, an acronym for “It has not escaped our notice.”

Evidence of this particle is generally found near the end of articles in scientific journals, as a way to signal that the authors’ contribution to the field will shake the foundations of reality, utterly change all life as we know it, rip open the very fabric of the Universe itself, and forever establish the authors as veritable Gods, deserving of awed worship by every man, woman and child on the planet.

A single particle of ithneon suffices to convey this message with just the right tone of sincere humility.

Origins and preferred usage:

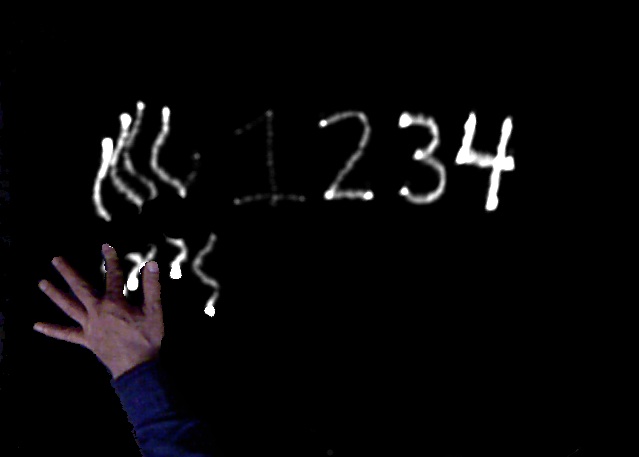

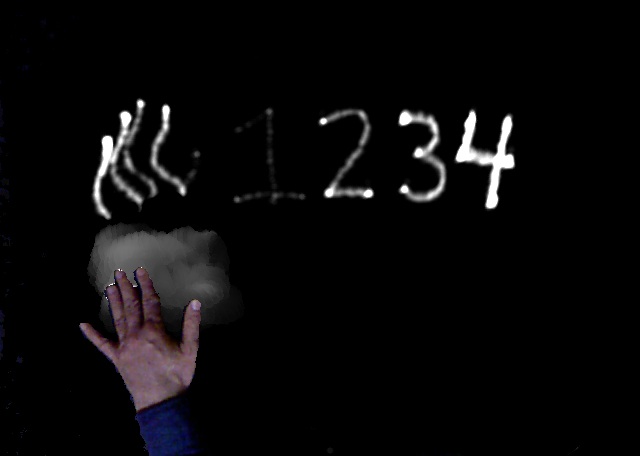

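Ithneon was first discovered in an article by James Watson and Francis Crick proposing the double helical structure of DNA (Nature 171: 737-738 (1953)). Near the end of that article the authors state:

“It has not escaped our notice that the specific pairing we have postulated immediately suggests a possible copying mechanism for the genetic material.”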

Since then, various ithneon particles have periodically been spotted. For example, near the end of a recent article by Dongying and Martin Wu, Aaron Halpern, Douglas Rusch, Shibu Yooseph, Marvin Frazier, Craig Venter and Jonathan Eisen, the authors state:

Similarly, near the end of a recent article in American Scientist entitled “The Origin of Life”, James Trefil, Harold J. Morowitz and Eric Smith state:

Discussion:

Ithneon is clearly a powerful particle in Nature. Its trajectory is capable of describing, among other things:

• how all life replicates and evolves;

• a fourth fundamental branch of life we never even knew about;

• life on other planets.

Not bad for a particle.