Halloween eve in New York City, and the last day before my writing partner and I begin our November novel. In this pause between two projects, I have time to reflect with nostalgia on something we used to have in NYC. It’s not something everybody cares about, and I realize I’m going to sound hopelessly old-fashioned in some circles for being so gauche as to mention this. But I can remember a time, not all that long ago really, when New York City actually had a democratically elected mayor.

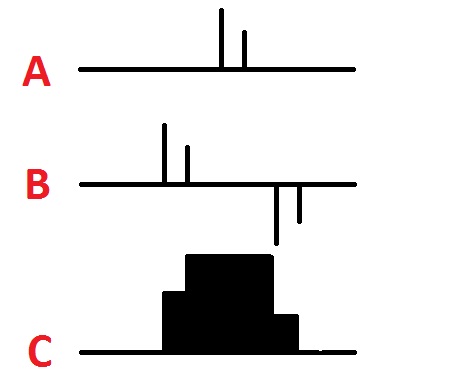

Now of course we have the illusion of an election. Everybody here is going through the motions, pretending it’s a real election, pretending that there is any doubt as to the outcome. But of course it’s not a real election. It’s like some poor sap being pushed into the ring with a raging gorilla, and told to fight a fair fight.

Well, almost like that. Except in this case, the gorilla weighs around sixteen times as much as his opponent.

And the odd thing is that none of this is about who is the better candidate. There are good things and bad things to say about both the incumbent mayor Mike Bloomberg and his challenger Bill Thompson. Each has done commendable things during his political tenure, and each has stumbled on occasion. But that’s not what this election is about, not even a little.

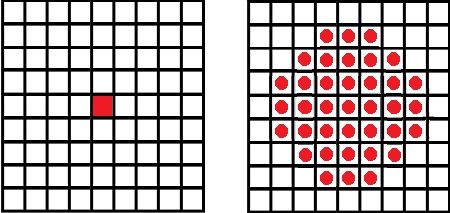

This election is about three hundred and fifty million dollars – around one third of a billion bucks. That’s how much our current Mayor, a billionaire worth around $17 billion, will have spent on his three runs for office by election day next Tuesday. By comparison, the Obama presidential campaign spent less than twice that much to reach an electorate approximately one hundred times larger.

Now don’t get me wrong. I’m not saying that’s a lot of money. On the contrary, it’s hardly anything at all – chump change really – if you are Mike Bloomberg and so happen to have $17 billion in the bank. By way of comparison, let’s say you were running for mayor, and you decided to self-finance your campaign. Suppose you had, say, $10,000 in your bank account (times being hard and all). Well, if you spent the same proportion of your personal wealth as our current mayor has this time around, the election would cost you less than a hundred bucks – about the cost of a nice dinner for two in Manhattan, if you order wine and dessert, and don’t have to pay for parking.

So basically all our mayor is doing, in terms of his own personal spending, is going out for dinner with a lady friend and maybe getting a nice merlot and the blueberry pie. He’s not even taking the car.

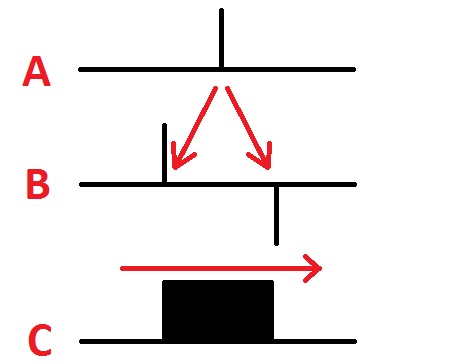

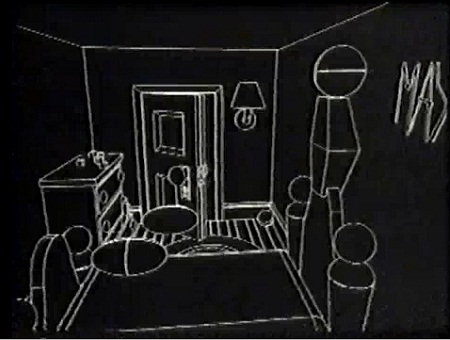

But from the point of view of us ordinary mortals the situation is quite different. Bloomberg has top ad agencies, production companies, storefronts in Manhattan filled with teams of campaign workers, and the services of the best professionals money can buy, all working around the clock, all focused on trying to discredit Bill Thompson. Almost every day I get a fancy flyer in my mailbox from the Bloomberg reelection campaign. And these are no ordinary flyers. They are like nothing you’ve ever seen before in an election. The production quality on these things makes even the polished Obama campaign literature look like it was hand-cranked on a used mimeograph machine by some sweaty old guy in a basement.

Somewhere there are suppliers of fancy paper, exotic inks, custom illustrations and high-class glossy photography, along with an entire Letterman show’s worth of writers, all thriving despite the bad economy thanks to the flyers that keep landing in my mailbox. And every one of these lovely flyers does the same thing: it attacks Bill Thompson with the intensity of a pack of feral dogs ripping into a downed calf.

I’m starting to wonder whether Bill Thompson isn’t actually some sort of saint – a holy man with angel wings and the moral discipline of a Mahatma Gandhi. Otherwise, by now we would surely all be convinced the man was a raving pornographic child molester, given the sheer volume of vitriol being hurled at him by the Bloomberg campaign.

Don’t get me wrong. Our incumbent mayor has achieved some fine things at City Hall. But this is crazy. The Thompson campaign is completely outgunned, shouted down at every turn by Bloomberg’s shockingly over-financed operation. The challenger is unable to get any message at all out to the voters. Anything he might have to say has been overwhelmed by the solid wall of media blitz that is the Bloomberg campaign.

No, Mr. Bloomberg is not breaking any laws by doing this. The fault lies with our election laws, which are so screwed up that they actually allow wealthy people to buy elections. And to be fair, it wouldn’t work if Bloomberg were an atrocious mayor. Nonetheless, this is not an election about the merits; it is not about which of the two candidates is better. That question has been effectively buried under an avalanche of lopsided spending. This election is about one thing: a sixteen-to-one spending ratio.

And so I find myself asking the following question: If you believe in the idea of fair elections, can you vote for someone who is deliberately, ostentatiously subverting the process? And if you were to pull the lever for that guy, knowing he was effectively buying your vote, could you still tell yourself that you live in a democracy?