I was having a conversation with a colleague and the phrase “transparent process” came up. It’s a great phrase, and it strikes at the heart of some interesting cultural questions.

For example, why is there a rich, widely shared culture of music, or of cooking, or of gardening or acting or writing, but not so much of computer programming or architecture? There are many answers to this question, but I suspect at least part of the answer has to do with transparent process.

The process of getting into music or cooking or gardening or acting or writing — and of many other crafts and skills as well — is quite transparent. Even a beginning musician or writer understands the basic process, and is able to perceive and absorb the ideas of advanced practitioners.

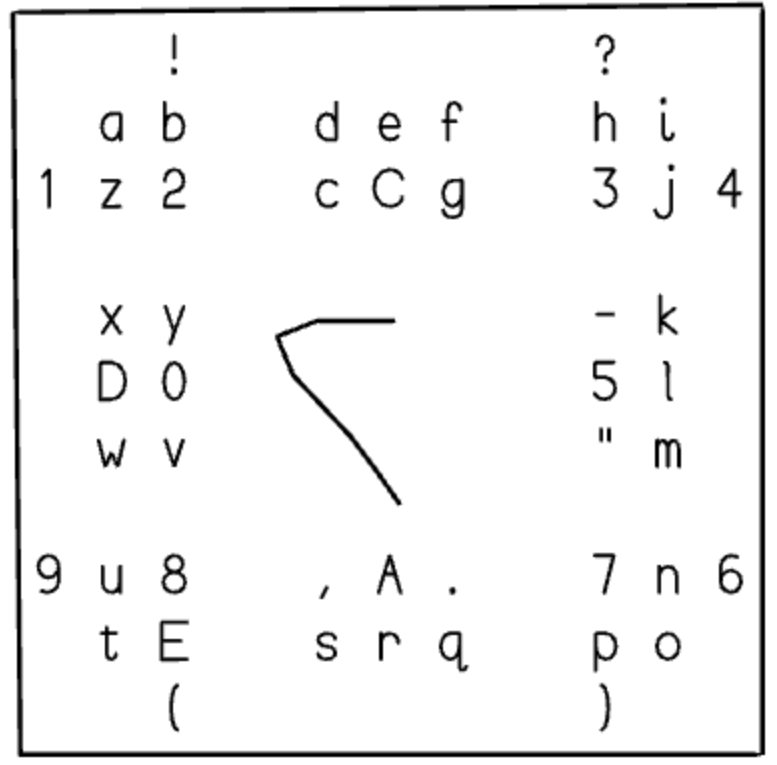

Yet many fields — particularly those we think of as the “technical fields” — don’t seem to offer this level of transparency. Most people can pick out a melody on a musical keyboard, yet most people cannot write even the simplest of computer programs.

This is not for lack of trying. There have been many attempts to create a transparent onboarding process for budding programmers. Yet these efforts have arguably failed, at least in comparison with efforts to show that “anybody can cook” or “anybody can play the piano”.

I wonder whether this is due to an inherent opacity somewhere in the process of learning the so-called “technical fields”, or to cultural bias. Or perhaps it is due to something else entirely.