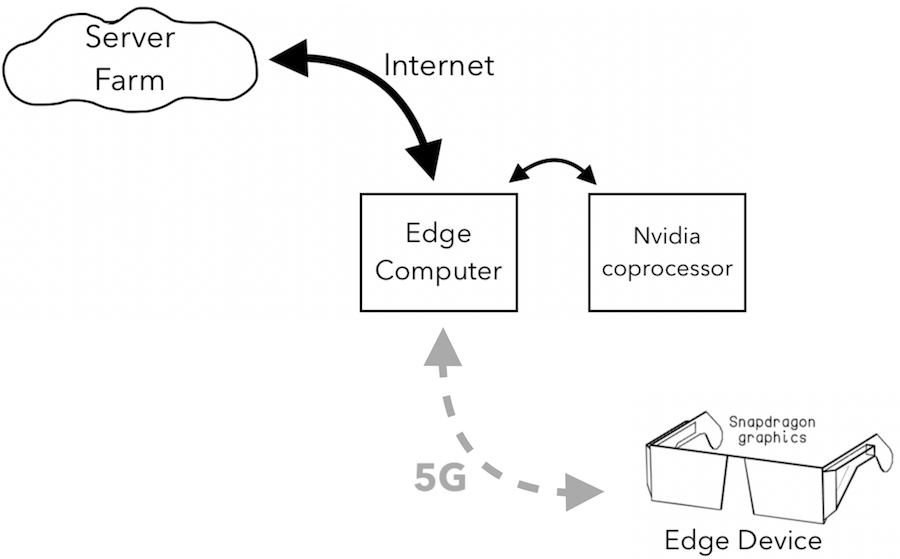

Here is the picture I have in mind of what an early version of “two-edge computing” might look like:

Let’s say you’re wearing some brand or other of SmartGlasses (not quite on sale yet, but coming soon). On the far side of the Edge, your wearable device isn’t going to have enough battery power for super-duper graphics.

So it will use the equivalent of a Snapdragon processor, like the one that’s probably in your current smartphone. Such processors are specifically designed to work in the low-power environment of tiny portable computers.

Somewhere nearby, within easy reach of your 5G wireless connection, will be the Near Edge, in the form of a honking big computer, such as a high-end PC. This computer will have a powerful (and power-hungry) co-processor, perhaps an Nvidia GPU, which can crunch machine learning computations far faster than anything your wearable could do.

If you hold your hand up in front of your face, your Far Edge wearable device will have enough computational power to realize it is looking at a 3D object. But all it will really be able to do with that information is find outlines and contours, which it will send as bursts of highly compressed data to your Near Edge computer.

That’s where your Near Edge computer’s co-processor will get to work: It will recognize that the object being seen is your hand, figure out the pose and the underlying skeleton, and send that data back to your wearable, also in the form of bursts of highly compressed data.
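
For the programmers in the audience, here is a toy Python sketch of that round trip. Everything in it is an assumption made for illustration: the wire format (a point count followed by 16-bit pixel coordinates, compressed with zlib), the function names, and especially `near_edge_handle`, which fakes the machine learning step by hard-coding the label and using the contour's centroid as a stand-in for the palm. A real system would run a trained hand-pose model on the co-processor and would probably use a very different encoding.

```python
import json
import struct
import zlib

# Hypothetical wire format: uint16 point count, then (x, y) pairs of uint16 pixels.

def encode_contour(points):
    """Far Edge: pack contour pixels compactly and compress them into one burst."""
    payload = struct.pack("<H", len(points))
    for x, y in points:
        payload += struct.pack("<HH", x, y)
    return zlib.compress(payload)

def decode_contour(burst):
    """Near Edge: decompress a burst back into a list of (x, y) pixels."""
    raw = zlib.decompress(burst)
    (count,) = struct.unpack_from("<H", raw, 0)
    return [struct.unpack_from("<HH", raw, 2 + 4 * i) for i in range(count)]

def near_edge_handle(burst):
    """Near Edge: placeholder for the heavy recognition step.

    The label is hard-coded and the "palm" is just the contour centroid;
    a real implementation would run a pose-estimation model here.
    """
    points = decode_contour(burst)
    cx = sum(x for x, _ in points) // len(points)
    cy = sum(y for _, y in points) // len(points)
    reply = {"label": "hand", "palm": [cx, cy]}
    return zlib.compress(json.dumps(reply).encode("utf-8"))

def far_edge_receive(burst):
    """Far Edge: decompress the reply so the wearable can act on it."""
    return json.loads(zlib.decompress(burst).decode("utf-8"))

# One round trip: wearable -> nearby computer -> wearable.
contour = [(100, 200), (140, 200), (140, 260), (100, 260)]
uplink = encode_contour(contour)
downlink = near_edge_handle(uplink)
result = far_edge_receive(downlink)
print(result)
```

The point of the sketch is the division of labor: the wearable only packs and unpacks small messages, while everything expensive happens inside `near_edge_handle` on the machine with the big co-processor.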

To you the process will appear seamless: Your hand is now a super-powered controller, able to interact with the augmented world around you in precise and intricate ways.

But that’s just half of the story — the Far Edge and the Near Edge working together. What about the Cloud itself? More on that tomorrow.